By: Jimmy Parkin, Sound Engineer, NEP UK Broadcast Services

Even those with only a passing interest in tennis understand the scale of the achievement that is winning Wimbledon. But there’s also the very significant scale of the broadcast operation that brings coverage to the world. There’s been quite a lot written about the Wimbledon Tennis Championships this year from a broadcast infrastructure perspective, but no real detail about the audio side of things. Here we’ll explore how the audio works, the challenges and how they were overcome.

For those not familiar with the changes, let’s recap. This year’s event marks the debut of Wimbledon Broadcast Services (WBS), which has taken over from the BBC as the new host broadcast operation of the All England Lawn Tennis Club (AELTC). NEP UK has been working with Wimbledon for 35 years, and this year delivered IP technical facilities for its in-house production as part of Wimbledon Broadcast Services.

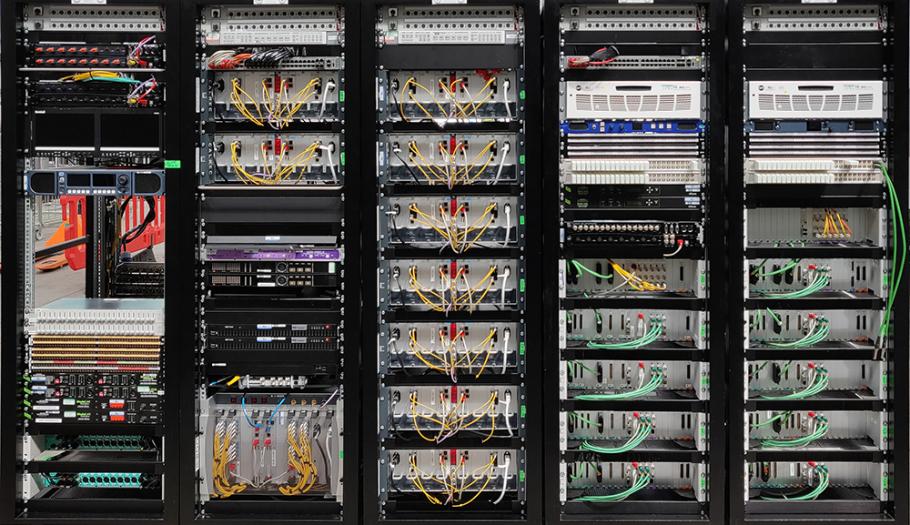

Since 2014, NEP UK has provided both host and domestic coverage of the event. This summer, it created 11 (de-rig) control rooms for the host broadcaster plus three for the Wimbledon Channel to serve the world feed and archive. To support the broadcast, NEP employed 118+ camera positions and 41+ EVS servers, plus three major OB units and a large fly-pack core.

On the video side, the facility was IP-based, using SMPTE ST2110-compliant technology. Content within the facility was distributed directly to rights holder MCRs, the World Feed, the Wimbledon Channel, the Central Content Store and a transmission/QC area.

What about the audio then? The central aim was to reduce the physical infrastructure that had to be installed while providing as much audio I/O as possible across Courts 3 to 18. Centre Court, Court 1 and Court 2 were standalone islands, each serviced by its own OB unit. Centre Court and Court 1 benefitted from NEP UK’s new IP-based trucks, Venus and Ceres. For Court 2 we used a slightly older OB unit. All three trucks have Calrec consoles.

We unveiled these two new SMPTE ST2110-compliant trucks earlier this year. We took the opportunity to invest in future-resistant IP-capable trucks. The ST2110 system infrastructure is identical in both vehicles. Each system is built around Grass Valley (formerly SAM) IQ UCP 25GbE Gateway cards, which provide two-way links between the all-new robust and resilient IP-based equipment and the existing baseband technology that is still needed to accommodate clients using SDI feeds. The trucks can also offer dual-level UHD and HD-SDI simultaneously.

Venus and Ceres are also equipped with PHABRIX’s HDR and IP-enabled test and measurement solutions. This includes three Qx 12G signal generation, analysis and monitoring solutions, to accommodate clients regardless of whether they are using SDI or IP feeds. NEP also invested in four Rx2000 units, with each Rx providing up to 4 channels of 2K/3G/HD/SD-SDI video/audio analysis and monitoring (dual inputs per analyser).

The other major differentiator for these vehicles is the significant reduction in cabling. The system requires far less fibre optic cable than the miles of coaxial cable previously required, and fibre is both quicker to integrate and much lighter. The new equipment requires greater cooling, so the truck design allows for more air conditioning, and all equipment can be cooled separately from the operational areas.

Venus and Ceres can also expand their capacity and facilities substantially via modular connection with multiple IP flypacks.

Venus is equipped with a Calrec Apollo and Ceres with a Calrec Artemis working alongside Axon Glue (embedders and Dolby E creation), RTS Telex Comms System with KP5032 and KP4016 comms panels and TAIT Radio Talkback base stations. Both are Dolby Atmos-ready, using Genelec 8351APM and 8331APM for main monitoring. Other audio monitoring includes TSL PAM-2, TSL Solo Dante and Wohler AMP-16. Audio glue includes TSL X-1 for up and down mixing and TSL Soundfield decoders with reverb from Bricasti and Yamaha.

Planning the audio for WBS was based on information from the NEP UK Technical Manager on this project, who worked closely with WBS on requirements. While not specified in those requirements, years of experience and onsite expertise led us down the path of a highly advanced networked audio solution.

I know these words tend towards cliché these days, but easy scalability and network flexibility were key to the success of this project. Audio operators had to be able to get whatever source they needed from whichever court without having to physically move or plug anything in. It’s why we opted for Calrec and its (non-IP) Hydra2 networking capabilities. It’s Hydra2 – and Calrec’s H2O GUI – that underpinned all of this. The key point is that any console can “talk” to any other console on this network.

In terms of preparation, Calrec’s H2O (Hydra2 Organiser) meant that port labels and naming could be carried out offsite before hardware was connected, and this could then be imported onto the live network. This allowed some pre-configuration work to be done before the whole network was built. It was obviously impossible to build the entire network offsite during prep time, so this helped massively.

Heavy use of Hydra Patch bays (virtual patch points within the Calrec network) meant that we did not need to know the hardware IDs and card layouts of modular I/O frames before arriving onsite, which was a major help when hiring in extra Calrec I/O.
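For readers who haven’t worked with virtual patch points, the idea can be sketched in a few lines of Python. This is not Calrec’s software or file format; the `PatchBay` class, its methods and the port strings are all invented for illustration. It shows only the principle: labels are prepared offsite, consoles refer to those labels, and the physical port behind each label is bound once the hardware is onsite.

```python
class PatchBay:
    """Hypothetical model of virtual patch points (not Calrec's API)."""

    def __init__(self, labels):
        # Labels can be prepared offsite, before any hardware ID is known.
        self._points = {label: None for label in labels}

    def bind(self, label, physical_port):
        # Onsite: attach a real I/O port to an already-named patch point.
        if label not in self._points:
            raise KeyError(f"unknown patch point: {label}")
        self._points[label] = physical_port

    def resolve(self, label):
        # Consoles refer to the label; the port underneath can change.
        port = self._points[label]
        if port is None:
            raise LookupError(f"patch point not yet bound: {label}")
        return port


# Label and port names below are invented for the example.
bay = PatchBay(["Court 3 Umpire", "Court 3 Crowd L"])
bay.bind("Court 3 Umpire", "hired-box-A/card-2/in-1")
bay.bind("Court 3 Umpire", "hired-box-B/card-1/in-4")  # swap hardware, same label
print(bay.resolve("Court 3 Umpire"))
```

Because everything downstream points at the label rather than the port, swapping in a hired I/O frame with an unknown card layout only changes the `bind` step.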

External to that, we connected to the overall host broadcaster infrastructure using MADI. We believe that it’s the greatest number of Calrec consoles that the company has seen on a de-rig network, with 11 across it in total. At this point we need to separate out the BBC as although it was no longer the host broadcaster (rather a rights holder), it did have some understandable special privileges. It also used Calrec consoles via its OB supplier.

Our OB trucks were connected to the Hydra2 network via MADI; they didn’t need the backbone interconnectivity. Both Centre Court and Court 1 are separate buildings, which obviously isn’t the case with the outside courts. In addition, a Calrec Brio was used for the media facilities (press conferences etc.).

As well as the flexibility to access whatever audio was required, the other core benefit of this network architecture was its inherent redundancy, which was, of course, vital. Everything had both primary and secondary connections. Once a signal was on the Hydra2 network it was dual-pathed, whereas MADI suffers from single points of failure.
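The primary/secondary principle can be shown with a small conceptual model in Python. It is only a sketch of the idea of dual-pathing; it says nothing about how Hydra2 detects faults or switches paths internally, and the `Link` class and `active_path` function are invented names.

```python
from dataclasses import dataclass


@dataclass
class Link:
    name: str
    up: bool = True


def active_path(primary, secondary):
    """Return the link to use, preferring the primary when it is healthy."""
    if primary.up:
        return primary
    if secondary.up:
        return secondary
    raise RuntimeError("both paths are down")


primary = Link("primary fibre")
secondary = Link("secondary fibre")

print(active_path(primary, secondary).name)  # normal running
primary.up = False                           # simulate a fault on the primary
print(active_path(primary, secondary).name)  # audio carries on via the secondary
```

A single MADI link corresponds to the case where there is no `secondary` to fall back on, which is the single point of failure described above.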

There was also the ability to share the inputs of I/O boxes. For example, the inputs of the Number Three Court commentary box were shared amongst many users (Number Three Court, Wimbledon Radio, Centre Court and some beauty mics). In a conventional setup that area would have needed at least four separate I/O boxes, whereas now only one was needed.
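As a rough illustration of input sharing, the Python sketch below models one I/O box whose inputs are taken by several productions at once. The users are those named above; the input names and which user takes which input are assumptions made for the example, and `share` is an invented helper, not a Calrec function.

```python
from collections import defaultdict

# One physical I/O box in the commentary area; input names are invented.
BOX_INPUTS = {"comm 1", "comm 2", "beauty mic 1", "beauty mic 2"}

# Maps each input to the set of productions currently taking it.
subscriptions = defaultdict(set)


def share(user, inputs):
    """Give `user` access to inputs on the box without any re-plugging."""
    for name in inputs:
        if name not in BOX_INPUTS:
            raise ValueError(f"no such input on this box: {name}")
        subscriptions[name].add(user)


# Users are those named in the text; which inputs each takes is assumed.
share("Number Three Court", ["comm 1", "comm 2"])
share("Wimbledon Radio", ["comm 1", "comm 2"])
share("Centre Court", ["beauty mic 1", "beauty mic 2"])

users = set().union(*subscriptions.values())
print(f"{len(users)} users, 1 I/O box")
print(sorted(subscriptions["comm 1"]))
```

The point is that each input exists once on the network and any number of consoles can take it, rather than each user needing its own box and its own split of the microphone.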

As mentioned, the video was IP-based (SMPTE ST2110). We interfaced with the IP video network via 32 Grass Valley MADI inputs (four of their cards). We co-existed as two separate routing infrastructures, joined via MADI.

The console surfaces were in Grass Valley production control rooms, very close to the MCR, where the heart of the Hydra2 routing sat. There were 37 I/O boxes on the de-rig side (18 court side for mic inputs and the rest in the MCR for monitoring/interfacing) with a total of over 200 mics. All courts were 5.1 surround.

The big pressure for us came in the first week, when all the courts were covered with a minimum of three cameras. Centre Court and Courts 1, 2 and 3 all had dedicated consoles. From Court 4 onwards, two courts were mixed per console. In the second week, the number of courts being used was reduced for obvious reasons.

The Wimbledon Channel, from an audio perspective, was another Calrec desk that was attached to the Hydra2 network. It enabled Wimbledon Channel to get all the court feeds directly as well as all the effects mics; whatever they wanted. The way we thought about it was that it was simply another production facility sat on our network.

In terms of commentators, Centre Court and Courts 1, 2 and 3 had dedicated boxes overlooking play. There were another three commentator boxes looking over Courts 12, 14 and 18 respectively, and then we had an additional four off-tubes, which were switched as required between the remaining courts. We didn’t offer commentary on every match all the time; it was decided on merit, in terms of which matches needed to be live and/or archived with commentary.

The biggest challenge was the sheer number of feeds and productions running. Because of the way that we designed the network, adding I/O boxes and other additional infrastructure was really easy. That’s the beauty of what Calrec has designed and what we implemented. It was so scalable; the way resources appeared on the network and how easy it was to move them across the network was a dream. In terms of planning, it was about making sure there was enough capacity and flexibility.

On a single fibre with Calrec’s Hydra2 network we could get 512 mono channels, which is fantastic compared with the 64 channels a MADI link carries at 48kHz. We weren’t restricted by cable infrastructure. The media facilities were quite a distance away in another building but the way this was architected meant that was easily accommodated, which was brilliant.
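To put that capacity in context, here is a quick back-of-envelope calculation in Python. The 512-channel figure and the 200-plus microphone count come from this article; the MADI figure of 64 channels per link assumes 48kHz operation. It compares raw channel capacity only and is not a description of how the cabling was actually laid out across the courts.

```python
import math

HYDRA2_CHANNELS_PER_FIBRE = 512  # figure quoted in the article
MADI_CHANNELS_PER_LINK = 64      # MADI at 48 kHz (assumed sample rate)
MICS = 200                       # the article says "over 200", so a lower bound

# How many MADI links one Hydra2 fibre is worth, by channel count alone.
madi_equivalent = HYDRA2_CHANNELS_PER_FIBRE // MADI_CHANNELS_PER_LINK

# Minimum links needed just to carry the microphones, ignoring redundancy.
madi_links_for_mics = math.ceil(MICS / MADI_CHANNELS_PER_LINK)
hydra2_fibres_for_mics = math.ceil(MICS / HYDRA2_CHANNELS_PER_FIBRE)

print(madi_equivalent)         # 8
print(madi_links_for_mics)     # 4
print(hydra2_fibres_for_mics)  # 1
```

In practice the microphones were spread across many courts and every connection was duplicated for redundancy, so the real fibre count was higher; the ratio is what matters.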

Lastly, we used IP (Dante) to make the commentary work because this prevented cabling issues and allowed us to use one-person comm boxes (Glensound Inferno). This is preferable for sports broadcasting, especially on Centre Court and Court 1, where there were three commentary positions; a box per commentator is easier and more manageable in the room, where table space is at a premium and a large, multi-person comm box would have been too big and unwieldy. Calrec’s cards handled this interfacing easily and were very resilient, which made connecting all the commentary feeds a plug-and-play operation. It was a big Dante network but it really was a very easy integration.

Again, without wishing to stray into cliché, very little went wrong at all. This was helped by the fact that we retained ongoing knowledge of the job as a whole and had gained a great deal of experience using Calrec networking on other large jobs in the lead-up to this event.

While this was clearly a complex project, we achieved everything that we set out to, following a clear design brief and using technology that did exactly what it was claimed to do.

This article first appeared in Resolution issue V17.6